Sylvia

Meeting the Machine in the Middle

Panopticon

"Meeting the Machine in the Middle" (2019)

Domestic Daikon

Work Type: #Installation #Collaborative #Light

Materials: Found objects / Light Tubes

Made in collaboration with Deming Chen and Longxin Chen

“Domestic Daikon”, Japanese for “domestically grown white radish”, is a light installation made of ready-mades. Using traffic cones as bases, we filled the installation with LED light tubes and food trash: empty drink bottles, food packaging boxes and plastic bags. All the ready-made materials were hung with fishing line, together taking the form of ice-cream cones.

Dirty, discarded ready-mades together compose a sweet, shining and dreamy figure. “Domestic Daikon” was inspired by a contradiction I observed during my two-week residency in Yokohama, Japan. On the one hand, streets in Japan are impressively clean, even though public trash cans are rare. There are very strict rules for sorting, disposing of and recycling trash, and littering can bring heavy penalties of up to five years in jail or a fine of up to 100,000 USD. On the other hand, there was no charge for plastic bags at the time, and food in Japan is often over-packaged: a single trip to a convenience store such as FamilyMart or 7-Eleven can leave a customer with three or four plastic bags. During the residency we relied heavily on convenience stores for food and drinks. After a week and a half, even though we had been taking out the trash frequently, we were left with a huge number of empty bottles, food wrappers and so forth. To comment on this situation, we used this trash to create three giant “ice creams”.

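CN (translated from Chinese):

“Domestic Daikon” (国产大根), meaning “domestically grown white radish” (国产白萝卜) in Chinese, is a ready-made light installation I created in January 2019 during a two-week residency at the Koganecho art community in Yokohama, Japan, together with two other Fujian artists who were in residence at the same time. Using traffic cones as bases, filled with LED light tubes, empty drink bottles, used food packaging boxes and plastic bags, and suspended with fishing line, it takes the form of three giant ice-cream cones, composing a sweet, shining and fleeting image out of discarded, dirty ready-mades.

The work was actually inspired by a contradiction we observed over those two weeks. On the one hand, Japanese streets are very clean even though trash cans are scarce; the bins in residential neighborhoods and convenience stores require strict sorting and designated placement, and signs all over the streets warn of heavy penalties for littering (roughly five years in prison and a fine of 500,000 RMB). On the other hand, as frequent convenience-store visitors, we found that Japan not only has no plastic ban, but food is often over-packaged: a single shopping trip can bring home three or four plastic bags and a pile of wrapping paper. Because creating and installing the work during the two-week residency was so demanding, the three of us relied almost every day on the convenience store a few steps away for our meals. After a week and a half we realized that, even though we had been diligently sorting and clearing our trash, we had still accumulated a huge amount of household waste. So when we finally collaborated on the work, we used what was at hand: we hung the big red traffic cones left idle in the studio upside down and lit the stacked household trash with LED tubes placed in the middle, forming three giant “ice creams”.

As brief visitors to Japan, we had neither the intention nor the ability to dissect, through a single work, the deeper social mechanisms behind this contradiction. We simply felt that problems often flow beneath a tidy, beautiful surface, and for now we could only turn what we saw and heard into a work that touches that surface. Like the work's title, “Domestic Daikon” (国产大根), it is just an impression that stayed with us after glimpsing the product label on white radishes on a store shelf.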

Dear Younger Me.

The Hand

Featured in:

- Huffington Post - "Fingers, Water, Tails - Tactile Tech at ITP"

- New York University PR Team - "ITP Winter Show 2016 Highlight"

Final Presentation

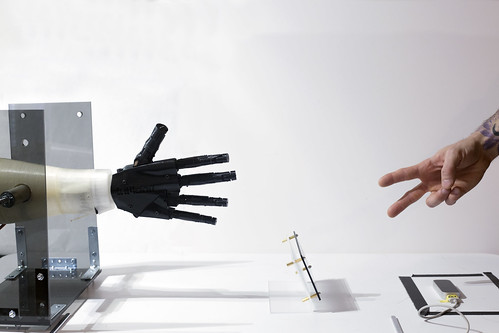

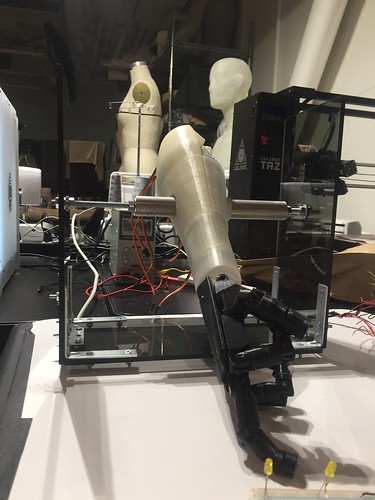

As a continuation of the midterm project, Tong and Nick decided to develop their ideas further and design a rock-paper-scissors (RPS) robotic hand (and arm) that is more interactive and has more personality. The final presentation for this project is an installation in which users can simply walk up and play RPS with the robotic hand without wearing any extra devices.

Process Explanation

Documentation

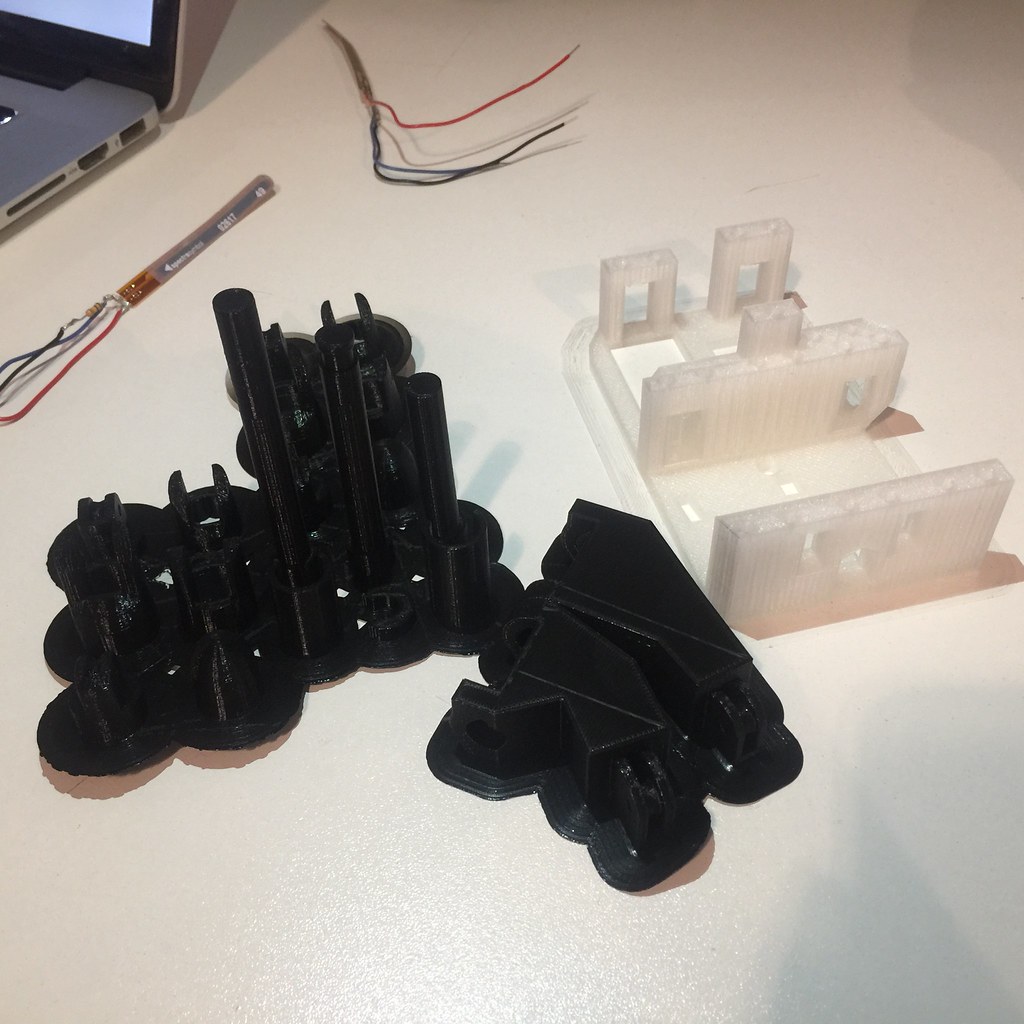

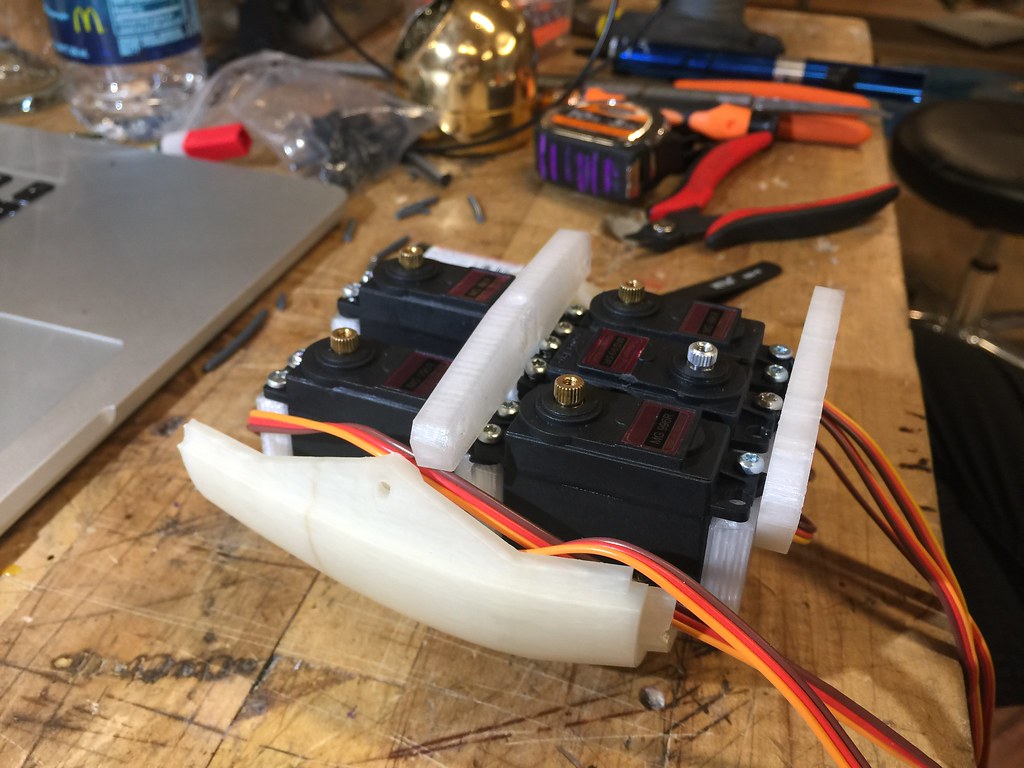

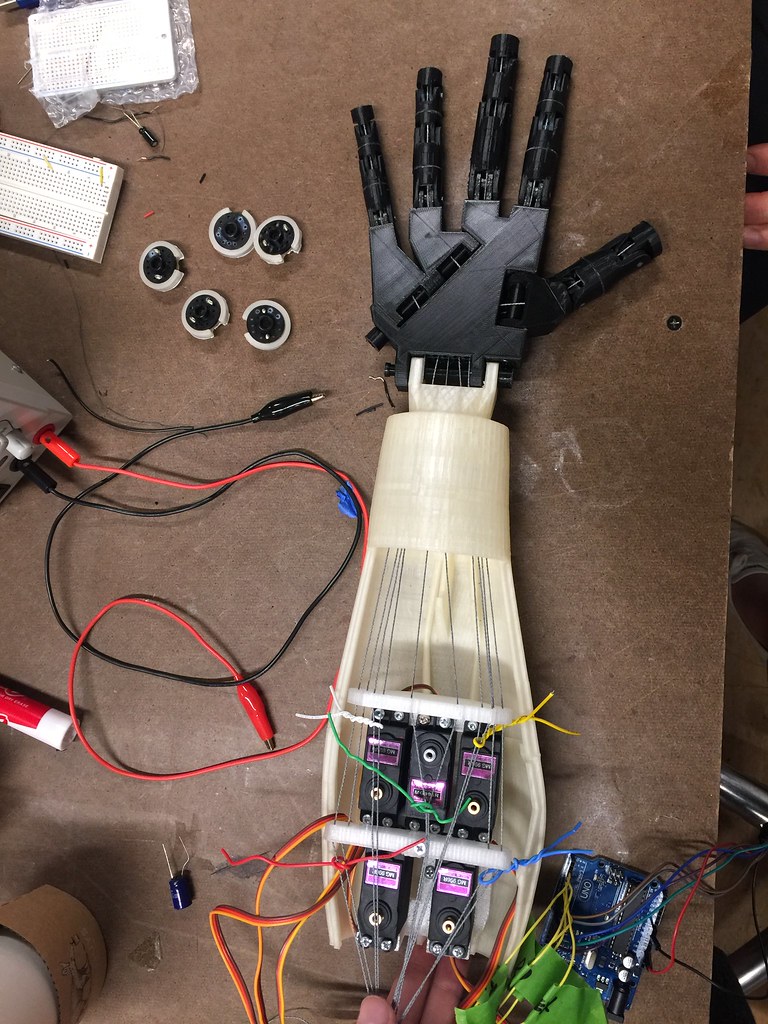

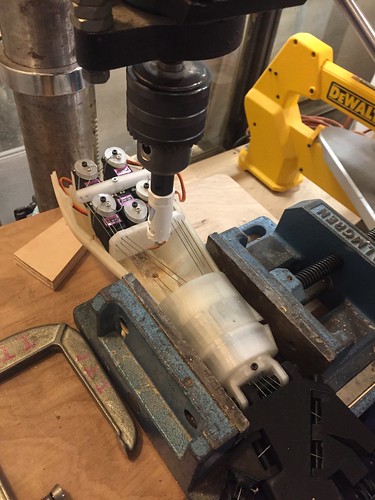

(1) 3D printed Robotic Hand & Forearm

To build the robotic hand and forearm, we used models from InMoov, an open-source model library for a 3D-printed, life-size robot. We used superglue and 3 mm filament to connect the finger joints, 3D-printed bolts to connect the forearm to the hand, and fishing line and servos to control the finger movements.

(2) Robotic Hand & Forearm Assembly
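The servo-and-fishing-line finger control can be pictured as a lookup from gesture to per-finger servo angles. This is a minimal sketch, written in Python for readability rather than as the hand's firmware, assuming one servo per finger, with 0° meaning extended and 180° meaning fully curled; the `FINGERS`/`POSES` names and the angle values are hypothetical, not taken from the InMoov files or the project's code.

```python
# Hypothetical pose table for a five-servo, tendon-driven hand.
# Assumed convention: 0 degrees = finger extended, 180 degrees = fully curled.
FINGERS = ("thumb", "index", "middle", "ring", "pinky")
EXTENDED, CURLED = 0, 180

POSES = {
    "rock": {finger: CURLED for finger in FINGERS},
    "paper": {finger: EXTENDED for finger in FINGERS},
    # Scissors: index and middle fingers extended, the others curled.
    "scissors": {
        finger: EXTENDED if finger in ("index", "middle") else CURLED
        for finger in FINGERS
    },
}


def servo_angles(gesture):
    """Return the target servo angle for each finger, in FINGERS order."""
    pose = POSES[gesture]
    return [pose[finger] for finger in FINGERS]


print(servo_angles("scissors"))  # [180, 0, 0, 180, 180]
```

Keeping poses as data rather than code makes it easy to recalibrate individual fingers, since real tendon lengths rarely map to identical angles.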

The Original Version (Nov. 2017):

(3) RPS Robotic Hand w/ Flex Sensitive Glove

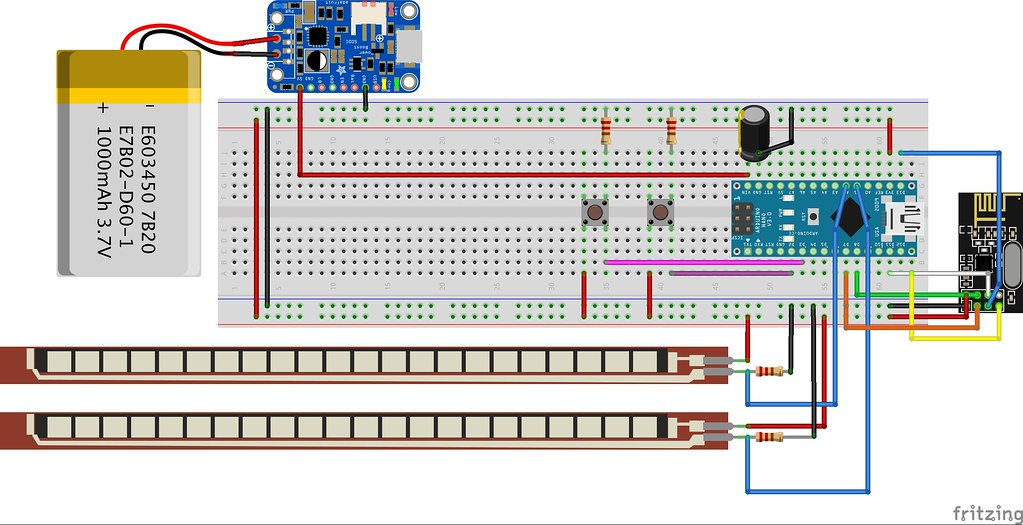

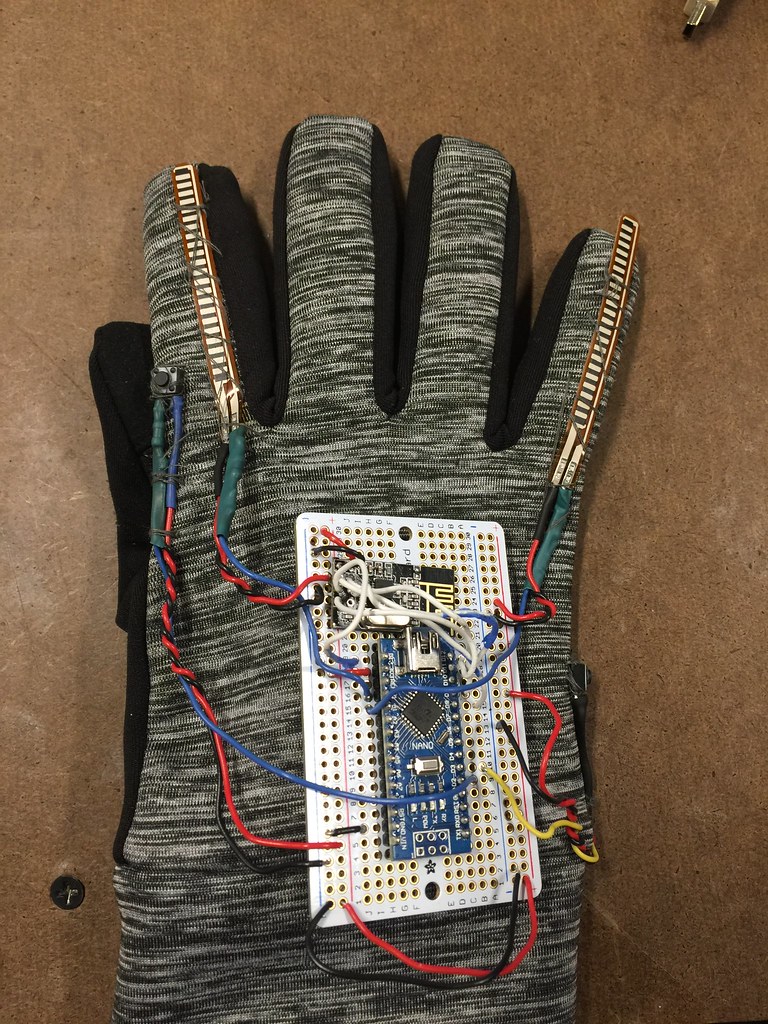

We designed a flex-sensitive glove for this installation. Two flex sensors, a "start the game" button and a "throw" button are all sewn onto a right-hand glove and connected to an Arduino Nano board, which has an nRF24L01 wireless transceiver that talks to a matching transceiver on the robotic hand.
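With only two flex sensors, the glove has to tell three gestures apart from two bent/straight readings. The sketch below, in Python rather than Arduino firmware, assumes (hypothetically) that one sensor sits on the index finger and one on the ring finger, that readings are 10-bit ADC values (0 to 1023) where higher means more bent, and that `BENT_THRESHOLD = 600`; none of these details come from the project.

```python
# Hypothetical sensor placement: index finger and ring finger.
# Readings are assumed to be 10-bit ADC values (0-1023); whether "higher"
# means "more bent" depends on how the voltage divider is wired.
BENT_THRESHOLD = 600  # hypothetical; would need calibration per glove


def classify(index_reading, ring_reading):
    """Map two flex-sensor readings to 'rock', 'paper', 'scissors', or None."""
    index_bent = index_reading >= BENT_THRESHOLD
    ring_bent = ring_reading >= BENT_THRESHOLD
    if index_bent and ring_bent:
        return "rock"
    if not index_bent and not ring_bent:
        return "paper"
    if not index_bent and ring_bent:
        return "scissors"
    return None  # index bent but ring straight: not a valid RPS gesture


print(classify(820, 790))  # rock
```

Putting the second sensor on the ring finger rather than the middle finger is what lets scissors (index and middle extended, ring curled) read differently from paper.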

To play the game, the player puts on the glove, presses the "start the game" button, then presses the "throw" button twice, and makes a hand gesture indicating their choice of rock, paper or scissors.
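The play sequence above (start, two throws, then a gesture) maps naturally onto a small state machine. This is a hedged sketch: the state names, the `RPSRound` class and the robot picking its move uniformly at random are assumptions for illustration, not the project's actual logic.

```python
import random

# Each key beats the gesture it maps to.
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}


def winner(player, robot):
    """Return 'player', 'robot' or 'draw'."""
    if player == robot:
        return "draw"
    return "player" if BEATS[player] == robot else "robot"


class RPSRound:
    """Hypothetical round flow: start -> two throws -> gesture -> result."""

    def __init__(self, rng=None):
        self.state = "idle"
        self.throws = 0
        self.rng = rng if rng is not None else random.Random()

    def press_start(self):
        self.state = "throwing"
        self.throws = 0

    def press_throw(self):
        if self.state != "throwing":
            return  # ignore throws before the game has started
        self.throws += 1
        if self.throws == 2:
            self.state = "awaiting_gesture"

    def show_gesture(self, gesture):
        """Resolve the round; return (robot_choice, result), or None if not ready."""
        if self.state != "awaiting_gesture" or gesture not in BEATS:
            return None
        robot = self.rng.choice(list(BEATS))  # assumption: robot plays randomly
        self.state = "idle"
        return robot, winner(gesture, robot)
```

Ignoring gestures until both throws have been registered mirrors the rhythm of the real game, so a player's hand shape mid-throw is never mistaken for their final choice.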

Final Version (December, 2017):

(4) Leap Motion

For a better user experience, we used a Leap Motion controller to replace the flex-sensor glove from the previous version of the RPS robotic hand. This way, users can activate the robotic hand simply by making certain gestures, such as waving.
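One way to recognize a wave is to count large side-to-side reversals in the palm's horizontal position. This sketch assumes the tracking layer already supplies a list of palm x positions in millimeters, one per frame; it makes no Leap Motion SDK calls, and the thresholds (`min_swing_mm=40.0`, `min_swings=3`) are hypothetical.

```python
def count_swings(xs, min_swing_mm=40.0):
    """Count side-to-side palm swings that travel at least min_swing_mm."""
    if not xs:
        return 0
    swings = 0
    direction = 0    # +1 moving right, -1 moving left, 0 not moving yet
    start = xs[0]    # resting position before the first swing
    extreme = xs[0]  # furthest x reached in the current direction
    for x in xs[1:]:
        if direction == 0:
            if abs(x - start) >= min_swing_mm:
                direction = 1 if x > start else -1
                extreme = x
                swings += 1
        elif (x - extreme) * direction > 0:
            extreme = x  # still moving the same way: extend the current swing
        elif abs(x - extreme) >= min_swing_mm:
            direction = -direction  # reversed far enough to count as a new swing
            extreme = x
            swings += 1
    return swings


def is_wave(xs, min_swing_mm=40.0, min_swings=3):
    """Treat several large side-to-side swings as a wave."""
    return count_swings(xs, min_swing_mm) >= min_swings


print(is_wave([0, 50, 0, 50, 0]))  # True
```

Measuring each reversal from the furthest point reached, rather than frame to frame, keeps small tracking jitter from being counted as a wave.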

(5) Re-design the Forearm

We also redesigned the cover for the wrist and rearranged the motors so that a steel tube could run through the arm, which allows the hand to be mounted inside an acrylic enclosure and move up and down freely.

Two other elements were added to the design: a gesture display board and a set of LED lights. The gesture display board lights up the icon corresponding to the user's gesture during the game, which helps users understand whether their gestures are being detected correctly. The LED lights light up in turn as the user throws twice before making an RPS gesture.
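Both feedback elements reduce to simple on/off patterns. The sketch below assumes, hypothetically, that one LED lights per throw (`num_leds` defaults to 2 here, but the real number of LEDs is not stated) and that the display board has one icon each for rock, paper and scissors in the order given by `ICONS`.

```python
ICONS = ("rock", "paper", "scissors")  # hypothetical icon order on the board


def throw_leds(throws, num_leds=2):
    """LEDs light up in turn: after n throws, the first n LEDs are on."""
    n = max(0, min(throws, num_leds))
    return [i < n for i in range(num_leds)]


def icon_states(detected):
    """Light only the icon matching the detected gesture (all off for None)."""
    return [icon == detected for icon in ICONS]


print(throw_leds(1))          # [True, False]
print(icon_states("paper"))   # [False, True, False]
```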

Flappy Shadow

"Flappy Shadow" is a project by Kai Hung and Tong Wu that allows users to create and fly a virtual bird from their own shadow puppets.

Source Code at GitHub Page